Power Real-Time Intelligence and AI

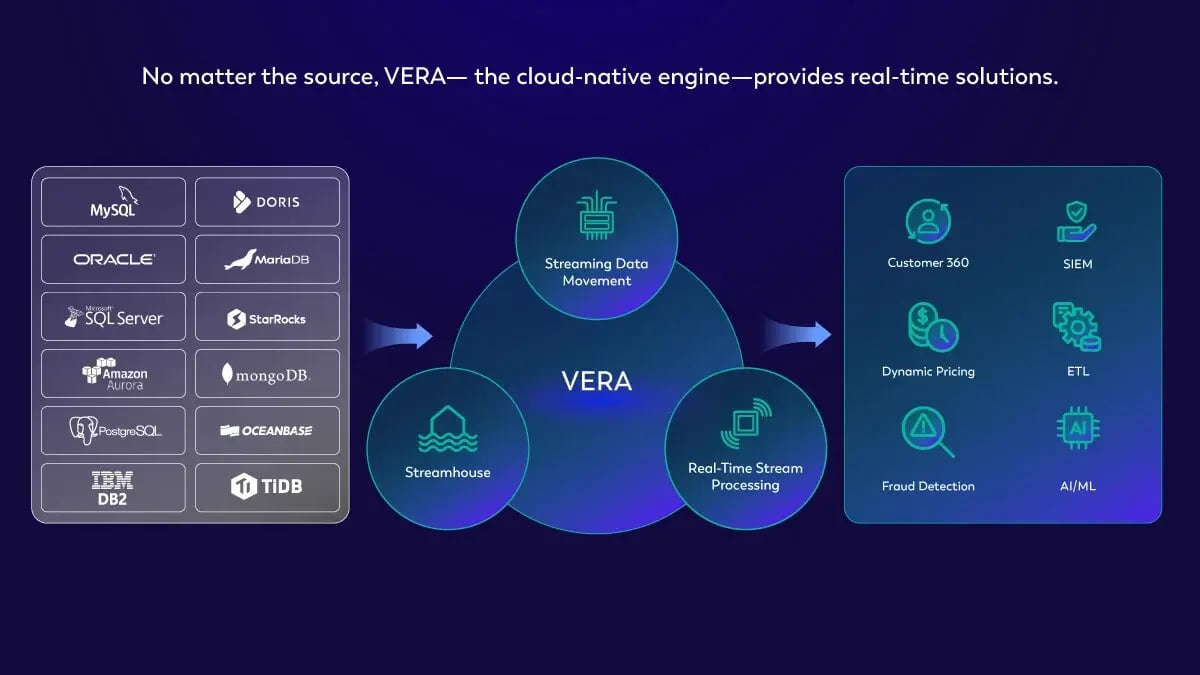

Meet the Unified Streaming Data Platform powered by VERA, the cloud-native engine built to revolutionize Apache Flink®.

Own Your Now

35,000+

Jobs running on a single cluster

>10 Petabytes

Data ingested per day

>2 Million

Cores operated in an elastically scaling cluster

6.9 Billion

Records processed per second

10 Trillion

Records ingested per day

Brought to you by the original creators of Apache Flink®

Brands You Know Trust Ververica

Unified Streaming Data Platform

Derive insights, make decisions, and take actions with data from any source.

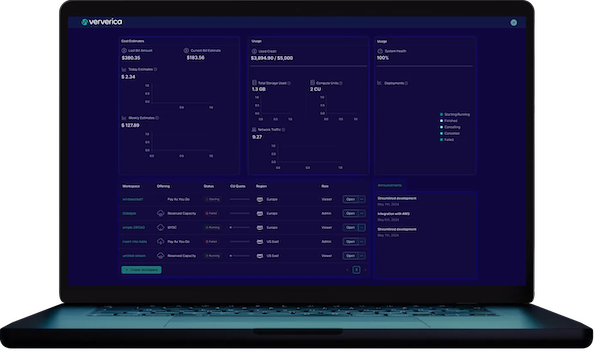

Benefits of Ververica's Unified Streaming Data Platform

Developer Efficiency

Maximize performance and productivity while minimizing resource use with enterprise-grade, flexible tools

Operational Excellence

Focus on delivering business value rather than operating your streaming data environment

Security

Give the right people access to the right data at the right time

Data Governance

Get an organized, secure, and consistent view of your data

Multi-Tenancy

Isolate workspaces, separate production and test environments, and rapidly provision and de-provision resources on demand

Elasticity

Automatically and dynamically scale resources and storage up or down as needed, based on the current workload and capacity

Deploy Anywhere, Anytime

Deploy on-premises, on Ververica Cloud, or get the best of both worlds with a zero-trust Bring Your Own Cloud (BYOC) deployment

Ververica Platform: Self-Managed

Ververica Cloud: Managed Service

Ververica Cloud: Bring Your Own Cloud

[Diagram: Source Data & Events, Source Integration, Unified Streaming Data Platform (VERA Engine) on Public Cloud or Private Cloud, Sink Integration, Destination Data and Events]

Brands You Know Trust Ververica

Booking.com, a leading travel ecosystem serving both partners and travelers, faced challenges with processing security data streams and orchestrating Flink applications with stateful upgrades and multi-tenancy requirements. Their previous approach proved cumbersome and inadequate for their needs.

Cash App, a provider of consumer financial services, highlights the transformative outcomes of integrating with Ververica’s software. With Ververica's solution, Cash App streamlined deployments, gained visibility into Flink jobs, and empowered teams with customizable features.

Ready to watch a demo or talk to a live expert?

The Ververica Team is here to help.

Latest News

A World Without Kafka

Why Kafka Falls Short for Real-Time Analytics (and What Comes Next)

Apache Kafka had a remarkable...

January 8, 2026 by Alex Campos

Introducing The Era of "Zero-State" Streaming Joins

Large-scale streaming joins, real-time data enrichment, and continuous analytics have historically...

November 19, 2025 by Giannis Polyzos

Ververica Platform 3.0: The Turning Point for Unified Streaming Data

The world of data has been changing rapidly. In 2025, businesses need to make decisions in real...

October 15, 2025 by Vladimir Jandreski

Additional Resources

Pricing

Ververica's Unified Streaming Data Platform pricing and support details for each deployment.

See Pricing

Ververica Academy

A comprehensive learning platform that offers a wide range of courses and educational resources.

Learn Apache Flink

Ververica Documentation

Your go-to resource for getting the most out of Ververica's Unified Streaming Data Platform.

Read Docs

Let’s Talk

Ververica's Unified Streaming Data Platform helps organizations create more value from their data, faster than ever. Our customers are generally up and running within days and start to see a positive impact immediately.

Once you submit this form, we will get in touch with you and arrange a follow-up call to demonstrate how our Platform can solve your particular use case.