What is Streamhouse?

Bridging the Gap Between Real-Time and Historical Data

Businesses are constantly seeking more efficient and cost-effective ways to harness their vast amounts of data. The traditional divide between batch processing for historical data and stream processing for real-time insights often leads to complex, siloed, and expensive data architectures. Streamhouse, also known as Streaming Lakehouse, is Ververica's approach to closing that divide. It unifies batch and stream processing so that organizations can get near real-time results on their data lake while staying cost-efficient and simplifying their data infrastructure. In other words, Streamhouse fills the gap between traditional streaming and batch architectures: it combines the power and cost-effectiveness of a traditional Data Lakehouse with stream processing capabilities that deliver (near) real-time results on the data lake.

Why Streamhouse? The Evolution of Data Architectures

For years, organizations have grappled with the challenges of managing increasingly large and diverse datasets. The rise of machine learning (ML), artificial intelligence (AI), and the escalating demand for real-time decision-making intensify the need for immediate data insights. Traditionally, this led to three distinct data paradigms:

- Data Warehouses: Optimized for structured data and complex analytical queries, but often expensive and not well-suited for unstructured data or real-time ingestion.

- Data Lakes: Excellent for storing vast amounts of raw, diverse data at low cost, but typically required separate processing engines for different data types and lacked strong ACID (Atomicity, Consistency, Isolation, Durability) guarantees.

- Real-Time Streaming Architectures: Built for low-latency processing and immediate insights, essential for event-driven architecture, but can be expensive and resource-intensive to maintain at scale.

While Data Lakehouses bridge the gap between data warehouses and data lakes, offering cost-effective storage with analytical capabilities, they still primarily focus on batch processing and struggle to deliver real-time insights effectively. This creates a significant void: businesses need a solution that seamlessly unifies real-time and batch processing without compromising on performance, cost, or complexity.

This unaddressed need is the key driver behind the Streamhouse evolution. Ververica recognizes that to truly empower businesses, a solution is required that provides fast, fresh data while leveraging the cost benefits of data lakes. Streamhouse capabilities address these limitations, simplifying architecture, reducing operational complexity and infrastructure costs, and empowering businesses to harness the full potential of their data. As explored in the Streamhouse Evolution blog, it is about moving beyond the "deadlock" of separate systems to a cohesive, unified platform.

What is Streamhouse? The Core Concept

The Streamhouse concept is straightforward yet revolutionary: it combines the best aspects of traditional Data Lakehouses with the agility and speed of real-time streaming. It's designed to process and analyze vast amounts of data in real-time while simultaneously allowing for deep analytical queries on historical data, all within a single, integrated platform.

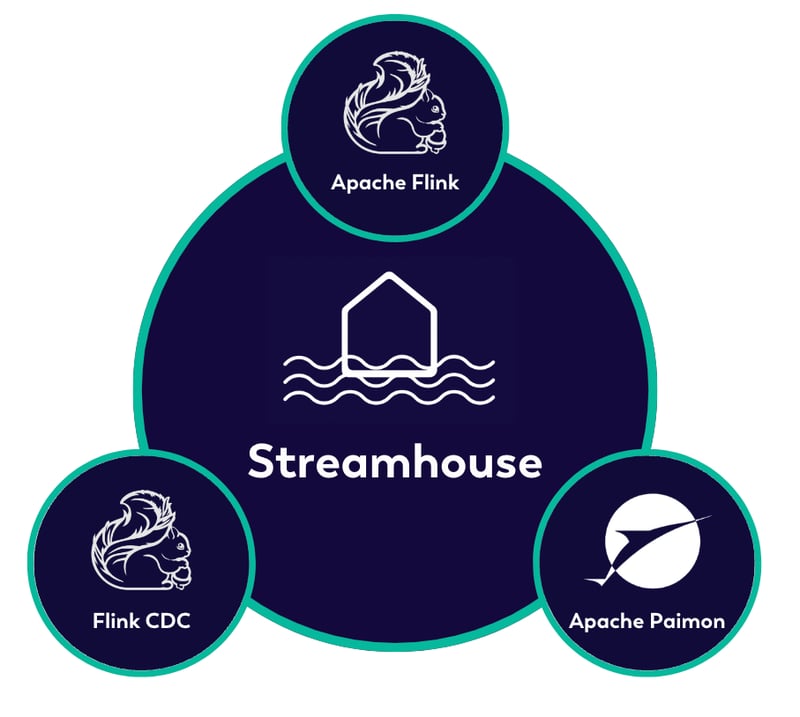

At its technical heart, Ververica’s Streamhouse capabilities leverage a powerful combination of open-source technologies:

- Apache Flink for Unified Compute: Apache Flink is an open-source framework for distributed, stateful computations on large-scale data streams. In Streamhouse, Flink serves as the unified compute engine, capable of processing both continuous streams and historical batch data.

- Flink Change Data Capture (CDC) for Unified Ingestion: Flink CDC enables efficient and real-time capture of data changes from various operational databases (like MySQL or Postgres) directly into the Streamhouse. This ensures that data is ingested and made available for processing almost instantaneously.

- Apache Paimon for Unified Lakehouse Storage: Apache Paimon provides a streaming storage layer that allows Flink to perform stream processing directly on the data lake. Paimon offers ACID transactions, schema evolution, and efficient data versioning, bringing data warehouse capabilities to the low-cost, scalable data lake. Its role is crucial in enabling the unified approach to data management within Streamhouse.

This powerful synergy allows Streamhouse to bridge the cost/latency gap, providing near real-time results on the data lake by merging the cost-efficiency of data lakes with the speed of real-time data.
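
To make the idea of one table serving both batch and streaming readers more concrete, here is a minimal sketch in plain Python. It is a toy model, not Paimon's or Flink's API: the `ToyTable` class, its method names, and the `+I` / `+U` / `-D` change markers are assumptions chosen for illustration. It shows a CDC-style feed being committed into versioned snapshots, which can then be read either as a point-in-time table or as a stream of changes.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

# One change event: (kind, primary key, row).
# Kinds are illustrative: "+I" insert, "+U" update, "-D" delete.
Change = Tuple[str, int, dict]


@dataclass
class ToyTable:
    """Toy keyed table that keeps every committed snapshot (hypothetical model)."""
    snapshots: List[Dict[int, dict]] = field(default_factory=lambda: [{}])
    changelog: List[Change] = field(default_factory=list)

    def commit(self, changes: List[Change]) -> int:
        """Apply a batch of changes as one unit and return the new snapshot id."""
        state = {k: dict(v) for k, v in self.snapshots[-1].items()}
        for kind, key, row in changes:
            if kind in ("+I", "+U"):
                state[key] = row
            elif kind == "-D":
                state.pop(key, None)
            else:
                raise ValueError(f"unknown change kind: {kind}")
        self.snapshots.append(state)
        self.changelog.extend(changes)
        return len(self.snapshots) - 1

    def batch_read(self, snapshot_id: int = -1) -> Dict[int, dict]:
        """Batch view: the full table as of a snapshot (latest by default)."""
        return self.snapshots[snapshot_id]

    def stream_read(self, from_offset: int = 0) -> List[Change]:
        """Streaming view: every change from a given changelog offset onward."""
        return self.changelog[from_offset:]


table = ToyTable()
first = table.commit([("+I", 1, {"country": "UK"}), ("+I", 2, {"country": "DE"})])
table.commit([("+U", 1, {"country": "FR"}), ("-D", 2, {})])

latest = table.batch_read()          # current state of the table
as_of_first = table.batch_read(first)  # earlier version of the same table
new_changes = table.stream_read(2)   # only the changes from the second commit
```

The same committed data answers a historical query (`batch_read`) and feeds an incremental consumer (`stream_read`), which is the property the components above are combined to provide at scale.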

Key Benefits

Ververica’s Streamhouse is part of the Unified Streaming Data Platform, and features several key value propositions that make it a compelling technology to address modern data challenges:

- Significant Latency Improvement: Streamhouse offers a substantial reduction in latency, transforming high-latency batch jobs (which might take days) to near real-time results (within minutes). This dramatic improvement is crucial for businesses that rely on fresh data for timely decisions.

- Flexibility with Flink SQL: By leveraging Flink SQL, users gain the flexibility to seamlessly transition between Streamhouse capabilities and pure real-time streaming based on their evolving business requirements. This adaptability ensures that the solution can grow and change with an organization's needs.

- Cost-Effectiveness and ROI Assessment: Streamhouse provides a more efficient, cost-effective, and low-risk method for system upgrades. It allows businesses to assess suitability and ROI by running operations and gauging results before committing to full real-time streaming, offering a balanced approach between cost and latency.

- Seamless Migration: The unified Flink engine and Flink SQL facilitate seamless migration, minimizing the effort involved in upgrading from traditional Lakehouse architectures to Streamhouse and potentially to full real-time streaming pipelines.

- Optimized Performance and Resource Efficiency: Streamhouse can significantly cut costs compared to always-on real-time streaming for use cases where minute-level data latency is acceptable. Furthermore, employing streaming preprocessing within Streamhouse yields five times better write performance and eight times better query performance compared to traditional batch Lakehouses.

- Enhanced Data Freshness: By continuously processing and updating data on the data lake, Streamhouse ensures that analytical queries always reflect the freshest available information, making it an ideal choice for data stream processing needs.

- Simplified Architecture: Consolidating batch and stream processing into a single platform reduces architectural complexity, making systems easier to build, maintain, and scale.

Common Data Processing Patterns Enabled by Streamhouse

Because Streamhouse combines the capabilities of Apache Flink, Flink CDC, and Apache Paimon, it enables a variety of crucial data stream processing patterns directly on the data lake. These patterns are designed to provide near real-time results and significantly reduce engineering maintenance efforts:

- Event Deduplication: Eliminating duplicate events is critical for data quality. Streamhouse achieves this using Apache Paimon's deduplicate and first-row merge engines, ensuring data integrity.

- Table Widening: The partial-update merge engine in Paimon allows users to update specific columns of a record through multiple updates without resorting to expensive streaming joins, streamlining data enrichment processes.

- Out-of-Order Event Handling: Real-world data often arrives out of sequence. Streamhouse addresses this by allowing users to specify sequence fields and sequence groups, guaranteeing data correctness even when events arrive late.

- Automatic Aggregations: For business analysis, Streamhouse supports automatic aggregations, making it easier to derive summary insights from large datasets.

- Time Travel Capabilities: Leveraging snapshots and tags, Streamhouse allows users to query previous versions of data, which is invaluable for auditing, debugging, and historical analysis.

- CDC Data Lake Ingestion with Schema Evolution: Streamhouse provides robust integration with CDC connectors for various databases, automatically handling schema changes as data evolves, ensuring seamless data ingestion into the data lake.

- Data Enrichment with Lookup Joins: Streamhouse supports data enrichment by building RocksDB indexes within Paimon for improved performance and allowing asynchronous lookups with retries for late-arriving records.
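
The merge behaviors behind the first three patterns can be sketched in a few lines of plain Python. This is a toy model of the semantics only, assuming rows are dictionaries that arrive in a list. The function names mirror the engine names mentioned above but are hypothetical helpers, not Paimon's API, and real tables apply these rules during writes and compaction rather than in application code.

```python
from typing import Dict, Iterable, Optional

Row = Dict[str, object]


def deduplicate(rows: Iterable[Row], key: str, seq: Optional[str] = None) -> Dict[object, Row]:
    """Keep one row per key: the latest one."""
    latest: Dict[object, Row] = {}
    for row in rows:
        current = latest.get(row[key])
        # Without a sequence field the last arrival wins. With one, the row
        # with the highest sequence value wins, whatever the arrival order.
        if current is None or seq is None or row[seq] >= current[seq]:
            latest[row[key]] = row
    return latest


def first_row(rows: Iterable[Row], key: str) -> Dict[object, Row]:
    """Keep one row per key: the first one that arrived."""
    first: Dict[object, Row] = {}
    for row in rows:
        first.setdefault(row[key], row)
    return first


def partial_update(rows: Iterable[Row], key: str) -> Dict[object, Row]:
    """Widen a table: merge the non-null columns of each update into one row per key."""
    wide: Dict[object, Row] = {}
    for row in rows:
        merged = wide.setdefault(row[key], {})
        merged.update({col: val for col, val in row.items() if val is not None})
    return wide


events = [
    {"id": 1, "ts": 10, "status": "created"},
    {"id": 1, "ts": 30, "status": "shipped"},
    {"id": 1, "ts": 20, "status": "paid"},  # arrives late
]
by_arrival = deduplicate(events, "id")[1]["status"]        # late event wins
by_sequence = deduplicate(events, "id", seq="ts")[1]["status"]  # highest ts wins
earliest = first_row(events, "id")[1]["status"]

updates = [
    {"id": 1, "name": "Ada", "total": None},  # from a customer source
    {"id": 1, "name": None, "total": 42},     # from an order source
]
widened = partial_update(updates, "id")[1]
```

Comparing `by_arrival` with `by_sequence` shows why a sequence field matters for out-of-order data: without it, the late "paid" event would overwrite the newer "shipped" status.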

Building Real-Time Data Views with Streamhouse

One of the most compelling applications of the Streamhouse capabilities in Ververica’s Unified Streaming Data Platform is the creation of real-time data views. This is achieved through a streamlined, three-stage pipeline: ingestion, aggregation, and visualization.

- Ingestion: Data from diverse sources, including message queues like Kinesis and Kafka, or relational databases via Flink CDC, is efficiently ingested into the Streamhouse environment. This data is then stored in Paimon tables. Notably, "Append Only" Paimon tables can even replace message queues for intermediate data ingestion, offering a more persistent and queryable storage layer.

- Aggregation: Once ingested, data is joined and processed to build aggregated data tables. For instance, you can calculate revenue per country by joining order and customer data. Paimon's features, such as merge-engine=aggregation and changelog-producer=full-compaction, enable automatic aggregation and efficient change log management, ensuring that your aggregated views are continuously updated.

- Visualization: The processed and aggregated data is then presented to users in real-time. This can be achieved by integrating with popular business intelligence (BI) tools or by utilizing the Paimon Java API to build custom web applications that stream updates continuously, providing truly live dashboards and reports.
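
As a rough illustration of the aggregation stage, the revenue-per-country example can be modeled in plain Python. This is a sketch under stated assumptions: the field names (`customer_id`, `country`, `amount`) and the `revenue_per_country` helper are hypothetical, and in Streamhouse the equivalent logic would be expressed declaratively through Flink SQL and Paimon table options rather than hand-written code.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, Tuple


def revenue_per_country(
    customers: Iterable[Dict[str, object]],
    orders: Iterable[Dict[str, object]],
) -> Iterator[Tuple[str, int]]:
    """Join each order to its customer and emit the updated running total.

    Each yielded pair is one update to the aggregated view, similar in
    spirit to a changelog record that a dashboard could consume.
    """
    country_of = {c["customer_id"]: c["country"] for c in customers}
    totals: Dict[str, int] = defaultdict(int)
    for order in orders:
        country = country_of.get(order["customer_id"])
        if country is None:
            continue  # no matching customer yet, skipped in this toy model
        totals[country] += order["amount"]
        yield country, totals[country]


customers = [
    {"customer_id": 1, "country": "DE"},
    {"customer_id": 2, "country": "US"},
]
orders = [
    {"customer_id": 1, "amount": 100},
    {"customer_id": 2, "amount": 250},
    {"customer_id": 1, "amount": 50},
    {"customer_id": 3, "amount": 999},  # unknown customer
]
view_updates = list(revenue_per_country(customers, orders))
```

Emitting a running total after every order, instead of one final result, is what keeps the aggregated view continuously up to date for the visualization stage.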

Using Streamhouse for real-time data views brings several benefits: continuous real-time processing, efficient storage on low-cost services like S3 with data warehouse properties, simplified data ingestion via Flink CDC, enhanced performance thanks to Ververica's VERA engine (which offers 2x better performance for real-time data processing), and flexibility in visualization. This process is detailed in the Building Real-Time Data Views with Streamhouse blog.

Streamhouse and the Broader Data Landscape

The Streamhouse concept represents a significant step towards a truly unified data architecture. By bringing stream processing capabilities directly to the data lake, it eliminates the need for separate systems for real-time and historical analytics. This convergence is vital for organizations moving towards an event-driven architecture, where immediate reactions to changes in data are paramount.

The integration of Apache Flink as the compute engine and Apache Paimon as the storage layer positions Streamhouse as a robust, scalable stream processing technology within the Unified Streaming Data Platform. It allows businesses to gain instant access to insights, power real-time applications, and conduct deep historical analysis without the complexities and costs associated with maintaining disparate systems.

Conclusion

Streamhouse is more than just a new buzzword; it's a practical and powerful technological concept for modern data challenges. By combining the strengths of Apache Flink for stream processing with the cost-efficiency and analytical capabilities of data lakes via Apache Paimon, Ververica has crafted a platform that truly unifies data stream processing. It enables businesses to move from batch-oriented insights to a world of real-time decision-making, all while simplifying their data infrastructure and optimizing costs. For any organization looking to achieve true data agility and harness the full potential of its streaming and historical data, exploring the components of the Unified Streaming Data Platform, including Streamhouse, is an essential next step.