The Release of Flink CDC v2.3

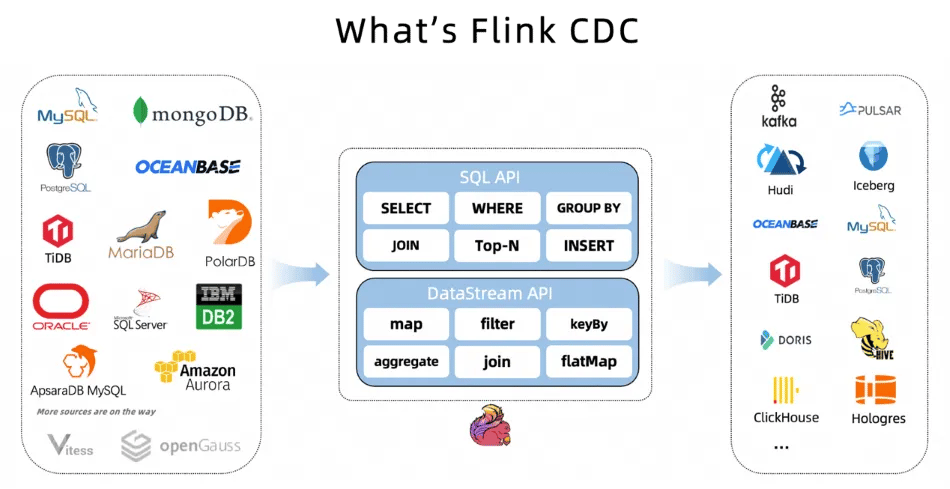

Flink CDC is a change data capture (CDC) technology based on database changelogs. It is a data integration framework that supports reading database snapshots and smoothly switching to reading binlogs (binary logs that contain a record of all changes to data and structure in the databases). This is useful for capturing and propagating committed changes from a database to downstream consumers, and it helps keep multiple datastores in sync and avoid dual writes. With powerful Flink pipelines and its rich upstream and downstream ecosystems, Flink CDC can efficiently integrate massive volumes of data in real time.

As a next-generation real-time data integration framework, Flink CDC has technical advantages such as lock-free reading, parallel reading, automatic table schema synchronization, and a distributed architecture. It also has its own standalone documentation. The Flink CDC community has grown rapidly since Flink CDC became open source more than two years ago and currently has 76 contributors, 7 maintainers, and more than 7,800 users in the DingTalk user group.

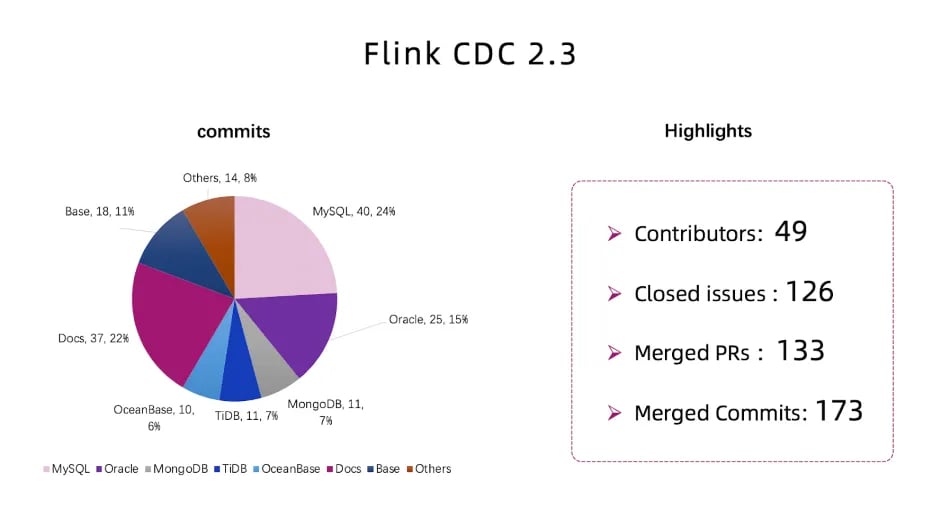

With joint efforts from the community, Flink CDC 2.3.0 has been officially released.

In terms of code distribution, this release brings both new features and improvements to MySQL CDC, MongoDB CDC, Oracle CDC, the incremental snapshot framework (flink-cdc-base), and the documentation module.

With so many improvements and features to cover, this blog post goes over the major improvements and core features of this release and what the future holds.

Key Features and Improvements

For the purpose of this post, we will explore the four most important features of this release.

Introduction of the DB2 CDC Connector

DB2 is a relational database management system developed by IBM. The DB2 CDC connector can capture row-level changes of tables in a DB2 database. DB2 enables SQL Replication based on the ASN Capture/Apply agents, which generate a change-data table for each table in capture mode and store change events in that change-data table. The DB2 CDC connector first reads the historical data in the table through JDBC, then reads the incremental change data from the change-data table.

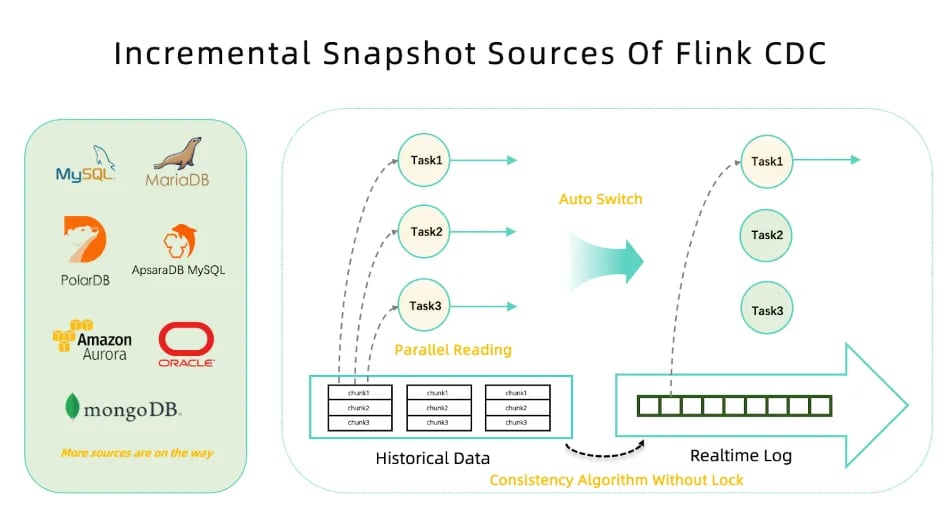
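To make the two-phase read more concrete, here is a minimal Python sketch of the general pattern: copy the current rows first, then replay only the change events that are newer than the snapshot. It is not the connector's code. The `ChangeEvent` class, the `seq` field, and the in-memory dictionaries are all hypothetical stand-ins for the JDBC scan and the DB2 change-data table.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class ChangeEvent:
    seq: int              # hypothetical position of the event in the change-data table
    op: str               # "insert", "update", or "delete"
    key: int
    row: Optional[dict]   # new row image; None for deletes


def read_snapshot(table: Dict[int, dict]) -> Dict[int, dict]:
    """Phase 1: copy the rows that exist right now (stands in for a JDBC scan)."""
    return {key: dict(row) for key, row in table.items()}


def apply_changes(state: Dict[int, dict], changes: List[ChangeEvent], after_seq: int) -> int:
    """Phase 2: replay events newer than after_seq and return the last seq applied."""
    last = after_seq
    for event in sorted(changes, key=lambda e: e.seq):
        if event.seq <= after_seq:
            continue  # already reflected in the snapshot
        if event.op == "delete":
            state.pop(event.key, None)
        else:
            state[event.key] = dict(event.row)
        last = event.seq
    return last


# Toy data: the table as it looked at change sequence 2, plus the full change-data table.
source_table = {1: {"name": "a"}, 2: {"name": "b"}}
change_table = [
    ChangeEvent(1, "insert", 1, {"name": "a"}),
    ChangeEvent(2, "insert", 2, {"name": "b"}),
    ChangeEvent(3, "update", 1, {"name": "a2"}),
    ChangeEvent(4, "delete", 2, None),
    ChangeEvent(5, "insert", 3, {"name": "c"}),
]

state = read_snapshot(source_table)
last_seq = apply_changes(state, change_table, after_seq=2)
print(state, last_seq)
```

The sketch assumes the snapshot corresponds exactly to a known position in the change stream. A real connector has to establish that position itself before switching from the snapshot to the incremental phase.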

Incremental Snapshot Algorithm Support from MongoDB CDC and Oracle CDC Connectors

In Flink CDC version 2.3, the MongoDB CDC connector and Oracle CDC connector are integrated into the Flink CDC incremental snapshot framework and implement the incremental snapshot algorithm. This means that they now support lock-free reading, parallel reading, and checkpointing. With this change, more Flink CDC sources support the incremental snapshot algorithm, and the community plans to migrate more connectors to the incremental snapshot framework in the future.
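The following Python sketch illustrates why chunking makes parallel reading and checkpointing possible. It is a simplified illustration, not the framework's implementation: the function names, the fixed-size integer key ranges, and the `finished` set standing in for checkpointed state are all assumptions. It also leaves out the reconciliation of changes that arrive while a chunk is being read, which a real lock-free reader needs.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Dict, List, Set, Tuple

Chunk = Tuple[int, int]  # half-open key range [start, end)


def split_into_chunks(min_key: int, max_key: int, chunk_size: int) -> List[Chunk]:
    """Cut the key range [min_key, max_key] into fixed-size chunks."""
    return [
        (start, min(start + chunk_size, max_key + 1))
        for start in range(min_key, max_key + 1, chunk_size)
    ]


def read_chunk(table: Dict[int, str], chunk: Chunk) -> List[Tuple[int, str]]:
    """Read one chunk with a bounded range scan; no table-wide lock is taken."""
    start, end = chunk
    return [(k, v) for k, v in sorted(table.items()) if start <= k < end]


def snapshot(table: Dict[int, str], chunks: List[Chunk], finished: Set[Chunk],
             parallelism: int = 4) -> List[Tuple[int, str]]:
    """Read every chunk that is not yet in `finished`, using a pool of readers.

    `finished` plays the role of checkpointed state: chunks recorded there
    before a failure are skipped when the job resumes.
    """
    pending = [c for c in chunks if c not in finished]
    rows: List[Tuple[int, str]] = []
    with ThreadPoolExecutor(max_workers=parallelism) as pool:
        results = pool.map(lambda c: read_chunk(table, c), pending)
        for chunk, result in zip(pending, results):
            rows.extend(result)
            finished.add(chunk)
    return rows


table = {k: f"row-{k}" for k in range(1, 11)}
chunks = split_into_chunks(1, 10, chunk_size=4)

# Pretend a checkpoint recorded the first chunk as done before a failure.
restored = {chunks[0]}
rows = snapshot(table, chunks, restored)
print(chunks)
print([k for k, _ in rows])
```

Because each chunk is an independent unit of work, the number of readers can be scaled up, and a restart only repeats the chunks that had not finished.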

Stability Improvements to the MySQL CDC Connector

As the most popular connector in the Flink CDC project, the MySQL CDC connector introduces many advanced features in version 2.3 and brings many improvements in performance and stability.

- Support for starting from a specific offset

This connector now supports starting jobs from a specified position in the binlog. You can specify the starting binlog position by timestamp, binlog offset, or binlog GTID. You can also set it to start from the earliest binlog offset.

- Optimization of chunk splitting algorithm

The chunk splitting algorithm used in the snapshot phase has been optimized. The previously synchronous algorithm is now asynchronous, and you can choose one of the primary key columns as the split column. The splitting process also supports checkpointing. Together, these changes resolve the performance problem caused by synchronous chunk splitting blocking the snapshot phase.

- Stability improvements

The connector now supports mapping all character sets to Flink SQL, which unlocks more user scenarios. It handles default values of different types so that jobs are more tolerant of irregular DDL, and it automatically obtains the time zone of the database server to solve time zone problems.

- Performance improvements

This version focuses on optimizing memory usage and read performance. Memory usage of the JobManager and the TaskManager is reduced through metadata reuse in the JobManager and streaming reads in the TaskManager. At the same time, binlog reading performance is improved by optimizing the binlog parsing logic.
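To make the asynchronous chunk splitting described above more concrete, here is a small Python sketch of the idea: a splitter thread emits chunks over a chosen split column one at a time, and the reader starts working as soon as the first chunk arrives instead of waiting for the whole table to be split. This is an illustration under stated assumptions, not the connector's code. The `order_id` split column, the queue-based hand-off, and all function names are hypothetical.

```python
import queue
import threading
from typing import List, Tuple

Chunk = Tuple[int, int]  # half-open range [start, end) on the split column
DONE = None              # sentinel meaning "no more chunks"


def split_chunks(rows: List[dict], split_column: str, chunk_size: int,
                 out: "queue.Queue") -> None:
    """Producer: emit chunks one by one instead of computing them all up front."""
    values = sorted({row[split_column] for row in rows})
    for i in range(0, len(values), chunk_size):
        start = values[i]
        if i + chunk_size < len(values):
            end = values[i + chunk_size]
        else:
            end = values[-1] + 1  # last chunk covers the remaining values
        out.put((start, end))
    out.put(DONE)


def read_chunks(rows: List[dict], split_column: str,
                chunks: "queue.Queue") -> List[List[int]]:
    """Consumer: begin reading as soon as the first chunk is available."""
    batches = []
    while True:
        chunk = chunks.get()
        if chunk is DONE:
            return batches
        start, end = chunk
        batches.append(
            sorted(r[split_column] for r in rows if start <= r[split_column] < end)
        )


# Toy table with a composite primary key (tenant_id, order_id); split on order_id.
rows = [{"tenant_id": i % 2, "order_id": 100 + i} for i in range(7)]

chunk_queue: "queue.Queue" = queue.Queue(maxsize=2)
splitter = threading.Thread(target=split_chunks, args=(rows, "order_id", 3, chunk_queue))
splitter.start()
batches = read_chunks(rows, "order_id", chunk_queue)
splitter.join()
print(batches)
```

The bounded queue keeps the splitter only slightly ahead of the reader, so splitting a very large table no longer delays the first read.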

Other improvements

- Flink CDC version 2.3 is compatible with four major versions of Flink (1.13, 1.14, 1.15, 1.16). This greatly reduces upgrade and maintenance costs for users.

- OceanBase CDC connector fixes the time zone problem, maps all data types to Flink SQL, and provides more options for a more flexible configuration, such as the newly added "table-list" configuration for reading multiple OceanBase tables.

- MongoDB CDC connector supports more data types and optimizes the filtering process of capture tables.

- TiDB CDC connector fixes the data loss problem with switching after the snapshot phase and supports region switching during reading.

- Postgres CDC connector supports the geometry type, adds more options, and allows the changelog mode to be configured to filter data.

- SQL Server CDC connector supports more SQL Server versions and has refined documentation.

- MySQL CDC and OceanBase CDC connectors now have documentation in Chinese, and the OceanBase CDC connector also has video tutorials.

Future Plans

The development of Flink CDC would not be possible without contributions and feedback from the community and the open source spirit of the maintainers. The Flink CDC community is already making plans for version 2.4. All users and contributors are welcome to participate and provide feedback. The project will focus on the following areas:

- Broader data source support - We plan to support more data sources and migrate more connectors to the incremental snapshot framework to unlock lock-free reading and parallel reading.

- Observability improvements - We want to provide a read rate limiting function to reduce the query pressure on the database during the snapshot phase. The new version will also provide richer monitoring metrics so that users can track task progress and monitor task status.

- Performance improvements - The new version will support batch mode in the snapshot phase, which will improve snapshot performance, and will automatically release idle readers' resources after the snapshot phase.

- Usability improvements - We plan to make connectors easier to use, for example by simplifying out-of-the-box options and providing examples in the DataStream API.

Ververica Platform is scheduled to support Flink CDC in version 2.11.

Acknowledgements:

Thanks to all 49 community contributors who contributed to version 2.3 of Flink CDC, especially the four maintainers of the community (Ruan Hang, Sun Jiabao, Gong Zhongqiang, Ren Qingsheng) who have done outstanding work for this release.

List of contributors:

01410172, Amber Moe, Dezhi Cai, Enoch, Hang Ruan, He Wang, Jiajia, Jiabao Sun, Junwang Zhao, Kyle Dong, Leonard Xu, Matrix42, Paul Lin, Qingsheng Ren, Qishang Zhong, Rinka, Sergey Nuyanzin, Tigran Manasyan, camelus, dujie, ehui, embcl, fbad, gongzhongqiang, hehuiyuan, hele.kc, hsldymq, jiabao.sun, legendtkl, leixin, leozlliang, lidoudou1993, lincoln lee, lxxawfl, lzshlzsh, molsion, molsionmo, pacino, rookiegao, skylines, sunny, vanliu, wangminchao, wangxiaojing, xieyi888, yurunchuan, zhmin, Ayang, Mo Xianbin
