Global Bank Achieves 90% Cost Savings with Mainframe Offloading!

💡 What Is Mainframe Offloading?

Mainframe offloading is the process of reducing the workload on traditional mainframe systems by moving specific data processing tasks, applications, or analytics to more modern, scalable, and cost-effective platforms, including cloud-based systems, distributed computing environments, or streaming data platforms.

Real-World Results: Mainframe Offloading in Leading Banks

In 2025, the ability of businesses to act quickly, adapt seamlessly, and make decisions based on real-time data is no longer just a competitive edge; it's a fundamental requirement for survival. Yet despite the rapid pace of technological innovation, many global financial enterprises feel shackled to legacy mainframe systems.

This is problematic because mainframe architectures were designed in the era of batch processing, long before AI, instant insights, or cloud-native agility were even conceivable. And while these traditional mainframe systems are proven to be reliable and robust, on their own they struggle to keep up with modern business needs. In this blog, we’ll explore why, and how one major financial institution brought its mainframe systems into the modern era.

Innovation is constant for financial industry leaders, including a top-tier global bank that recently modernized its decades-old data infrastructure without sacrificing the reliability of its legacy mainframe systems. By reimagining how to use its existing systems rather than replacing them entirely, the bank achieved transformative results in just three weeks, including:

- 90% reduction in mainframe processing costs

- 60% faster job runtime

Figure One: Results of mainframe offloading at a major (unnamed) bank.

Even though this bank is keeping its identity private (for now), the story of how it achieved these results is worth sharing. Let’s dive into how it accomplished them.

The Hidden Burdens of Legacy Mainframe Infrastructure

At the heart of this bank’s operations is a core IBM mainframe system that runs COBOL (Common Business-Oriented Language)-based batch jobs. Those jobs are responsible for processing several essential financial functions, including interest calculations, fraud detection, account status updates, and regulatory reporting. For years, this system has served reliably under moderate loads. But as customer volumes grow and digital expectations intensify, cracks are appearing in the foundation.

- Poor performance and stability: The nightly batch cycle, which once completed in a manageable window, now stretches to eight hours across 30 tightly coupled steps. Typically, if any single step fails, the entire process has to be restarted from the beginning, leading to frequent delays, operational bottlenecks, and mounting pressure on IT teams. System instability is common, latency increases unpredictably, and recovery times are longer, all of which threaten service level agreements and business continuity.

- Delayed reporting and compliance: Beyond performance issues, these limitations also create serious strategic risks. Fraud detection, for example, can occur only after transactions are finalized, leaving the bank exposed to losses during the gap between activity and analysis. Regulatory compliance also suffers, as delayed liquidity reporting makes it hard to meet tightening deadlines imposed by financial authorities.

- Rising MIPS and talent costs: Because mainframe software licensing is typically tied to MIPS (Millions of Instructions Per Second) consumption, growing workloads drive up licensing fees and compute expenses. Meanwhile, maintaining and enhancing the system requires specialized skills in legacy technologies like COBOL, VSAM (Virtual Storage Access Method), and IMS (Information Management System). Professionals with these skills are hard to find, since most now train in more modern technologies, and as a result, talent is increasingly expensive to source and retain. Compounding these operational concerns, past modernization efforts were often avoided due to fears of disruption, complexity, or failure.
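To make the restart penalty from the first bullet concrete, here is a minimal Python sketch. It assumes a hypothetical failure at step 20 of the 30-step cycle and treats every step as equally expensive; neither the failing step nor per-step durations are disclosed in the bank's story, and `steps_executed` is an illustrative helper, not part of any product.

```python
def steps_executed(total_steps: int, fail_step: int, resume: bool) -> int:
    """Count step runs when step `fail_step` (1-based) fails once, then succeeds.

    resume=False models the legacy behavior described above: restart from step 1.
    resume=True models a hypothetical job that can pick up at the failed step.
    """
    if not 1 <= fail_step <= total_steps:
        raise ValueError("fail_step must be between 1 and total_steps")
    first_attempt = fail_step  # steps 1..fail_step ran; the last one failed
    if resume:
        second_attempt = total_steps - fail_step + 1  # failed step through the end
    else:
        second_attempt = total_steps  # everything runs again
    return first_attempt + second_attempt


# Assumed scenario: one failure at step 20 of 30.
restart_runs = steps_executed(total_steps=30, fail_step=20, resume=False)
resume_runs = steps_executed(total_steps=30, fail_step=20, resume=True)
print(restart_runs, resume_runs)
```

Under these assumptions, a single late failure costs 50 step runs with a full restart versus 31 with a resume, which illustrates why a tightly coupled cycle becomes so expensive as it grows.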

“Mainframe offloading isn’t about tearing down the past. It’s about building a bridge to the future, incrementally, one stream at a time.”

Customers Demand Real-Time, Always-on Banking

At the same time that this bank navigates its specific challenges, the entire financial sector is experiencing major disruptions. For example, several major banks, including Bank of America, Commonwealth Bank of Australia, ANZ, Royal Bank of Scotland, and NatWest, recently suffered repeated tech outages, locking customers out of online banking and disrupting critical services. These aren’t just glitches; they signal a deepening crisis of confidence in traditional banking infrastructure, fueling the rise of agile fintechs rapidly gaining market share. The fallout is severe, including:

- Eroding customer trust and deposit losses

- Business-critical payment delays

- Systemic risks across interconnected financial networks

- Growing pressure for government intervention

The bank knows that taking action is critical. To future-proof its operations, it needs resilient, high-performance systems that ensure uninterrupted uptime, real-time responsiveness, and consistent performance, all at speed. A new mainframe is not an option: it would be too expensive, too inflexible, and reliant on a shrinking talent pool. The team realizes that cloud-native technologies offer the scalability, cost-efficiency, and platform modernization needed in the current landscape.

But staying competitive means more than simple modernization. It requires always-on availability, customer-centric services, and real-time processing that is seamlessly integrated with existing legacy systems. Ultimately, the bank needs enterprise-grade stream processing that delivers the speed, consistency, fault tolerance, security, and high availability required, preferably in one unified solution.

The Smarter Path Forward: Strategic Mainframe Offloading

Rather than implement a risky "rip-and-replace" strategy (which is known to incur high costs, long timelines, and operational upheaval), the bank chooses a more elegant solution: strategic data offloading. Leveraging the mainframe as a secure, reliable source of truth, they begin to shift computationally intensive workloads to a modern, cloud-native streaming platform.

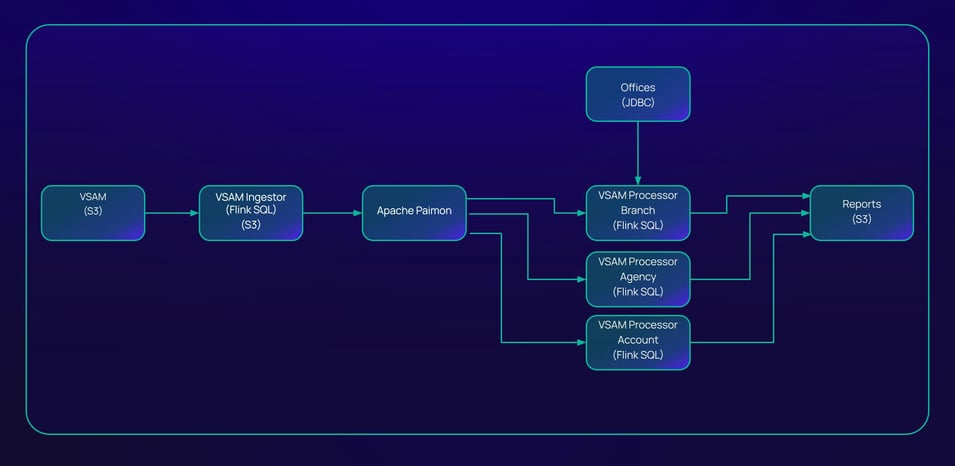

This is where Ververica steps in. In partnership, the bank deploys Ververica’s Unified Streaming Data Platform, powered by Apache Flink®. With this, they extract high-value data from the mainframe in real time and process it on the new, modern, scalable solution. This hybrid model allows the organization to preserve the integrity and resilience of its legacy infrastructure while unlocking new capabilities through real-time data processing. See Figure Two for the architecture behind the components described below.

This transformation centers around several key technical and architectural advancements:

- Efficient Data Export: Using JDBC connectors, the team extracts transactional data stored in VSAM files directly from the IBM mainframe. These exports are carefully orchestrated to minimize the impact on production workloads while ensuring completeness and consistency.

- Modern Codebase Migration: Critical business logic embedded in aging COBOL programs, such as rules for interest accrual or eligibility checks, is systematically refactored into Java, preserving functionality while making the codebase maintainable, testable, and extensible in a modern development environment.

- Seamless Cloud Integration: Processed streams are routed into Azure Blob Storage for durable storage and further downstream use. The architecture is built to integrate smoothly with Apache Kafka® for event-driven workflows, and the team has future plans to adopt Apache Paimon™ for efficient lakehouse-style analytics.

- Real-Time Stream Processing at Scale: Apache Flink, managed via Ververica’s enterprise-grade solution, enables the processing of millions of events per second with sub-second latency. This allows the bank to analyze transactions in real time and respond immediately to anomalies and opportunities alike.
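As an illustration of what refactored business logic can look like, the sketch below expresses a simple daily interest accrual rule with fixed-point decimal arithmetic. The bank refactored its COBOL into Java; this sketch uses Python purely for brevity. The rule itself (a 365-day year and half-up rounding to the cent) and the name `daily_interest` are assumptions for illustration, not the bank's actual logic.

```python
from decimal import Decimal, ROUND_HALF_UP

CENT = Decimal("0.01")
DAYS_IN_YEAR = Decimal("365")  # assumed day-count convention


def daily_interest(balance: Decimal, annual_rate: Decimal) -> Decimal:
    """Return one day's interest on `balance`, rounded half-up to the cent."""
    if balance < 0 or annual_rate < 0:
        raise ValueError("balance and annual_rate must be non-negative")
    raw = balance * annual_rate / DAYS_IN_YEAR
    return raw.quantize(CENT, rounding=ROUND_HALF_UP)


# Hypothetical account: 10,000.00 at a 3.65% annual rate.
accrued = daily_interest(Decimal("10000.00"), Decimal("0.0365"))
print(accrued)
```

Using `Decimal` rather than binary floating point keeps cent-level results reproducible, which matters when migrated code has to match the output of the legacy job it replaces.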

Figure Two: Image of high-level architecture of bank's mainframe offloading solution.

This phased, non-disruptive approach also ensures zero downtime during migration. Crucially, it allows the bank to leverage existing cloud investments and avoid vendor lock-in, all while accelerating time-to-value. The entire deployment, from design to full operation, takes under three weeks, demonstrating that with Ververica’s Unified Streaming Data Platform, large-scale modernization doesn’t have to mean months of planning and execution.

Tangible Results from Mainframe Offloading: Speed, Savings, and Strategic Advantage

The impact of the transformation is both immediate and measurable:

| Metric | Before | After | Impact |
| --- | --- | --- | --- |
| Nightly batch processing | 8+ hours | ~3 hours | 60% faster |
| MIPS consumption | Not disclosed | 90% saving | Only 10% MIPS consumption |
| Cost savings | N/A | >$1M/Year | Immediate cost savings realized |
| Business agility | Reactive: dependent on overnight batch completion | Near real-time | Proactive: Business responds to events instantly |
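The impact figures in the table can be sanity-checked with a few lines of arithmetic. This is a minimal sketch: the table reports "8+ hours" and "~3 hours", so the exact values 8 and 3 used here are assumptions, and `percent_reduction` is an illustrative helper.

```python
def percent_reduction(before: float, after: float) -> float:
    """Return how much smaller `after` is than `before`, as a percentage."""
    if before <= 0:
        raise ValueError("before must be positive")
    return (before - after) / before * 100


batch_reduction = percent_reduction(before=8.0, after=3.0)  # hours, assumed exact
mips_remaining = 100 - 90  # percent of prior MIPS left after a 90% saving
print(f"batch runtime reduction: {batch_reduction:.1f}%")
print(f"MIPS remaining: {mips_remaining}%")
```

With these inputs the runtime reduction comes to 62.5%, which is consistent with the rounded "60% faster" in the table, and a 90% saving leaves 10% of the original MIPS consumption.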

By offloading resource-intensive computations to Ververica, the bank drastically reduces its dependence on costly mainframe cycles. Every percentage point reduction in MIPS usage translates directly into lower software licensing fees and hardware costs, delivering more than $1 million in savings annually. But the benefits extend far beyond the balance sheet. With access to real-time data streams, the bank fundamentally improves its operations:

- Fraud detection is now proactive instead of reactive. Suspicious patterns are recognized during transactions rather than after the fact, which significantly reduces exposure and improves customer protection and satisfaction.

- Interest calculations and balance updates happen in near real time, enhancing customer experience and trust.

- Regulatory reporting is now timely instead of delayed. This results in greater transparency with oversight bodies and better insight into potential problems before they happen.

These capabilities fix broken processes and open doors to new possibilities. Armed with real-time data pipelines, the bank is currently exploring advanced applications powered by artificial intelligence, including agentic AI models for adaptive fraud prevention and personalized, context-aware customer interactions.

Ververica: The Strategic Enabler for Mainframe Offloading

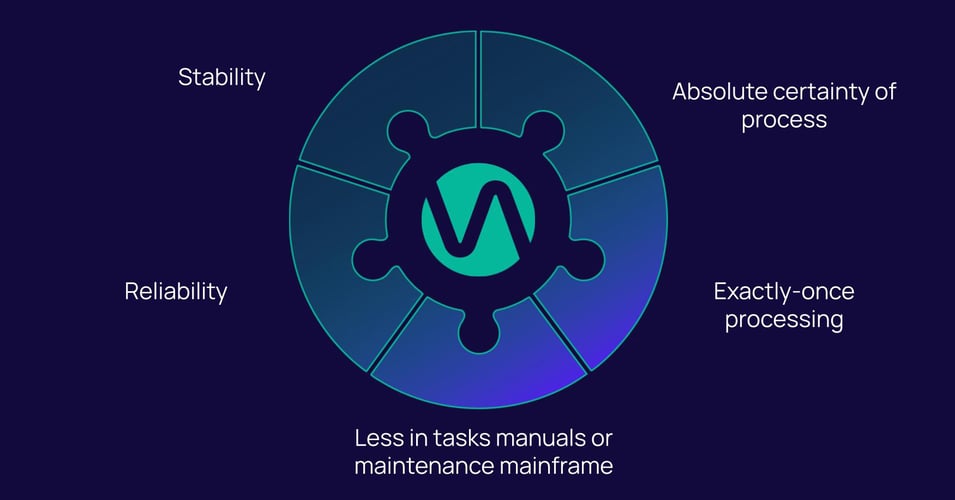

Selecting the right solution for mainframe system updates is critical. The bank recognizes the need for a solution that combines raw performance with enterprise-grade reliability, security, and ease of integration. Ververica stands out among other established alternatives for several reasons:

- Ververica’s Unified Streaming Data Platform is powered by VERA, the high-throughput stream processing engine that can handle massive data volumes with predictable low latency.

- Exactly-once semantics ensure that, even when failures occur, each event in the stream affects the results exactly once, keeping data consistent and results accurate.

- The elastic, cloud-native architecture scales automatically with demand, reducing both cost and manual oversight.

- Built-in enterprise scheduling, monitoring, and observability tools provide operations teams with comprehensive visibility and control.

- Native support for integrating with legacy environments makes bridging old and new systems seamless.

- Available as a managed service, Ververica reduces operational overhead, allowing internal teams to focus on innovation instead of infrastructure maintenance.

Most importantly, Ververica has a proven track record, including a highly credible customer base in mission-critical finance environments, where accuracy, consistency, and uptime are non-negotiable.

Figure Three: Benefits of utilizing Ververica for mainframe offloading projects.

The Road Ahead: Evolving into a Real-Time Financial Enterprise

The success of this initiative has sparked a broader cultural and technological shift across the organization. What started as a targeted optimization project is now evolving into a company-wide movement towards real-time operations.

Figure Four: Additional real-time projects under consideration.

Future plans involve migrating the remaining batch processes to continuous, unbounded streaming architectures, building on the cost savings and operational benefits already demonstrated. By leveraging Change Data Capture (CDC), the bank can achieve real-time synchronization between the mainframe and external systems, similar to implementations seen with several of Ververica’s other existing customers. This enables instant updates to accounts, balances, and customer profiles across dashboards, risk engines, and compliance tools, eliminating data latency and empowering real-time decision-making.
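The core idea of CDC-based synchronization can be sketched in a few lines of Python: each change captured at the source is applied, in order, to a downstream copy. The event shape (`op`, `key`, `row`), the account keys, and the `apply_change` helper are all hypothetical, chosen only for illustration; they do not represent the bank's schema or any specific CDC tool's format.

```python
from typing import Any

ChangeEvent = dict[str, Any]  # hypothetical shape: {"op", "key", "row"}


def apply_change(replica: dict[str, dict], event: ChangeEvent) -> None:
    """Apply one change event to an in-memory replica keyed by primary key."""
    op, key = event["op"], event["key"]
    if op in ("insert", "update"):
        replica[key] = event["row"]  # upsert, so replaying an event is harmless
    elif op == "delete":
        replica.pop(key, None)  # deleting a missing key is a no-op
    else:
        raise ValueError(f"unknown op: {op!r}")


# Hypothetical change stream, in source commit order.
events: list[ChangeEvent] = [
    {"op": "insert", "key": "ACC-1", "row": {"balance": 100}},
    {"op": "insert", "key": "ACC-2", "row": {"balance": 250}},
    {"op": "update", "key": "ACC-1", "row": {"balance": 175}},
    {"op": "delete", "key": "ACC-2", "row": None},
]

replica: dict[str, dict] = {}
for event in events:
    apply_change(replica, event)
print(replica)
```

Writing the apply step as an upsert is a deliberate choice in this sketch: if an event is delivered twice, the replica still converges to the same state as the source.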

Additionally, the foundation is now in place within the company to develop AI-driven decision engines capable of automating complex risk assessments and personalized customer recommendations. As these capabilities mature and the need to write processed data back to the mainframe diminishes, the mainframe’s workload will further reduce, accelerating the path toward a more agile, scalable, and future-ready architecture.

As one of the region’s most forward-thinking banks, this institution is now setting the standard for what modern banking can look like: responsive, intelligent, and driven by data flowing freely across all types of systems, regardless of source.

Conclusion: Legacy Modernization is Imperative for Success

Mainframes aren’t becoming extinct, nor should they. Their reliability, security, and transactional integrity remain unmatched for many core banking functions. But when used as the sole engine for analytics, reporting, and real-time decision-making, they become bottlenecks in a world that demands speed and agility.

This bank’s journey proves there’s a smarter way, one which preserves the strengths of legacy systems while augmenting them with modern, real-time platforms. By strategically offloading data and computation, organizations can achieve dramatic cost reductions, accelerate processing, and unlock innovation, all without compromising stability.

For any enterprise weighed down by aging infrastructure, the message is clear: transformation isn’t about tearing down the past. It’s about building a bridge to the future, incrementally, one stream at a time.

Related Resources:

- Learn more about the many data use cases Ververica helps solve.

FAQs:

What workloads are the best candidates for offloading from the mainframe to modern platforms?

In banking and finance, migrating batch processes to real-time is particularly impactful for use cases such as transaction processing, fraud detection, payment processing, and real-time gross settlement systems. Beyond finance, many other domains can also benefit from real-time transformation, including (but not limited to) customer relationship management, inventory management, patient record updates, insurance claims processing, customer data analytics, airline reservations, and telecommunications, wherever responsiveness, accuracy, and operational agility are critical.

Ververica enables the seamless extraction and processing of streaming data from mainframe sources, allowing banks to move time-sensitive, event-driven workloads off the mainframe. This reduces mainframe MIPS usage while enabling real-time insights and responsiveness.

How can we ensure data consistency and integrity when moving processes from the mainframe to distributed systems?

Ververica is built on Apache Flink, which provides exactly-once processing semantics, ensuring no data loss or duplication, even during failures. This guarantees consistency and correctness when replicating or transforming data streams from mainframe systems. Combined with checkpointing and stateful processing, Ververica maintains data integrity across distributed environments, matching the reliability expected from mainframe-grade operations.
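The general idea behind checkpoint-based exactly-once processing can be shown with a toy simulation: state and source position are snapshotted together, and after a crash both are rolled back before events are replayed. This is a simplified model written for illustration, not Flink's API or implementation; the `Checkpoint` class, the `process` function, and the sample events are all assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Checkpoint:
    offset: int  # position of the next event to read from the source
    total: int   # operator state (a running sum) at that position


def process(events, checkpoint_every=3, fail_at=None, restore_state=True):
    """Sum `events`, simulating one crash just before index `fail_at`."""
    checkpoint = Checkpoint(offset=0, total=0)
    offset, total = 0, 0
    crashed = False
    while offset < len(events):
        if fail_at is not None and offset == fail_at and not crashed:
            crashed = True
            offset = checkpoint.offset  # rewind the source
            if restore_state:
                total = checkpoint.total  # roll state back with it
            continue
        total += events[offset]
        offset += 1
        if offset % checkpoint_every == 0:
            checkpoint = Checkpoint(offset, total)
    return total


events = [10, 20, 30, 40, 50, 60, 70, 80]
exactly_once = process(events, fail_at=5)
replay_only = process(events, fail_at=5, restore_state=False)
print(exactly_once, replay_only)
```

In this run, restoring state along with the source position yields 360, the same as a failure-free run, while rewinding the source alone yields 450 because two events are counted twice. That difference is what separates exactly-once from at-least-once results.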

What are the cost implications of offloading versus continuing to maintain or scale mainframe infrastructure?

Mainframes are expensive to scale due to MIPS-based licensing and specialized hardware. By offloading event-driven workloads to Ververica running on cloud or on-prem Kubernetes, organizations achieve significant cost savings. Ververica reduces the dependency on mainframe compute cycles while enabling elastic, pay-as-you-go scaling on commodity infrastructure, lowering TCO and freeing budget for innovation.

How do we integrate offloaded applications with existing legacy systems without creating fragile, hard-to-maintain interfaces?

Ververica acts as a real-time integration layer between mainframes and modern systems. It supports native connectors (for Kafka and several databases) and integrates with Flink CDC to ingest data streams from mainframe database transaction logs. Its stream processing pipelines transform, enrich, and route data seamlessly. With declarative SQL and Java/Python APIs, workflows are maintainable, observable, and resilient, replacing brittle batch ETL processes with robust, event-driven integration.

What security and compliance challenges arise when moving sensitive workloads off the mainframe, and how can they be mitigated?

Ververica delivers the enterprise-grade security required for financial workloads:

- End-to-end encryption (in motion and at rest)

- Role-based access control (RBAC) and integration with LDAP/SSO

- Audit logging and data lineage tracking

- GDPR/CCPA compliance via data masking and retention policies

By centralizing secure stream processing, Ververica ensures sensitive financial data remains protected, even as it moves beyond the mainframe perimeter.

Which cloud-native technologies are best suited to replace mainframe functions effectively?

Ververica’s Unified Streaming Data Platform is built for cloud-native, Kubernetes-based environments, making it ideal for modernizing mainframe workloads. It leverages Apache Flink for stateful, low-latency stream processing, Kubernetes for elasticity and resilience, a microservices architecture for modularity and scalability, and CI/CD pipelines for rapid, reliable deployment. This stack enables banks to replace rigid, batch-oriented mainframe processes with agile, real-time, always-on services aligned with fintech-grade architectures.

How can we measure the success of mainframe offloading in terms of performance, scalability, and business agility?

Ververica provides real-time observability and monitoring through dashboards, metrics, and alerts. Success can be measured by tracking:

- Reduced mainframe MIPS consumption (direct cost savings)

- Sub-second processing latency for critical workflows

- System uptime and fault recovery time (rapid restart via state snapshots)

- Throughput scalability under peak loads (e.g., end-of-day batch surges)

- Time-to-market for new real-time services (e.g., instant fraud alerts)
