Oceanus: A one-stop platform for real-time stream processing powered by Apache Flink

In recent years, the growing need for timeliness, together with advances in software and hardware technologies, has driven the emergence of real-time stream processing. Real-time stream processing allows public and private organizations to monitor collected information, make rapid decisions, tweak production processes, and ultimately gain competitive advantages. To satisfy the need for accessible real-time stream processing, we built Oceanus, a one-stop platform for real-time stream processing. Oceanus uses Apache Flink® as its execution engine and therefore inherits the benefits of Flink. Additionally, Oceanus provides efficient management for every stage of a real-time application’s lifecycle: development, testing, deployment, and operations. These capabilities significantly improve the efficiency of real-time applications in production.

Introduction

Tencent users generate a large amount of data every day, which is a huge asset for us. In return, Tencent uses that data in both strategic and operational decisions to better serve users. Recent advances in Big Data allow us to process data at large scale, but scale alone is not enough. Because the value of data decays over time, it’s also critical to obtain timely results from an evolving world.

The Big Data team (http://data.qq.com) is responsible for building an efficient and reliable infrastructure for data collection, analytics, and serving at Tencent. Every day we process 17 trillion messages, which occupy approximately 3 PB on disk after compression. In extreme cases, peak throughput reaches 210 million messages per second. At this scale, and with a large number of business units to serve, the team faces several major challenges:

- With diverse application scenarios in different business units, the requirements for real-time applications vary significantly.
- Given the large amount of data and the number of jobs, we have to allocate computing resources efficiently and reasonably.
- We have to provide high-throughput and low-latency computation while tolerating failures in production.

To meet these challenges and help our customers gain timely insight in a fast-evolving environment, we built a one-stop platform for real-time stream processing called Oceanus. Oceanus uses Apache Flink® as its execution engine and provides efficient management for the life cycle of Flink jobs.

We chose Flink as the execution engine because of its excellent performance and powerful programming interfaces. Before that, our real-time applications ran on a real-time computing platform based on Apache Storm. While working with Storm, we struggled with several drawbacks:

- Apache Storm’s API is very low-level, so developing real-time applications requires a lot of effort. Users must have knowledge of the computing framework to ensure the correctness of their programs.
- Because Storm lacks support for windows, users have to deal with out-of-order records by themselves, which is a tedious and error-prone task.
- There is no built-in support for state. Users have to manage the storage of state and its distribution across nodes manually. Very often, users store their state in external distributed storage, e.g. HBase and MySQL, and performance is naturally degraded by the remote accesses.
- It’s difficult to achieve EXACTLY-ONCE message transmission and computation in cases of failure.
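To make the window drawback concrete, the following is a minimal sketch, in plain Python rather than Storm or Flink code, of the bookkeeping an application has to carry when the framework offers no windows. The class name, the parameters `window_size` and `max_out_of_orderness`, and the simple watermark rule are all assumptions made for illustration; they are not taken from Oceanus or from any Storm job.

```python
from collections import defaultdict


class TumblingWindowCounter:
    """Counts records per key in fixed event-time windows, tolerating bounded disorder."""

    def __init__(self, window_size, max_out_of_orderness):
        self.window_size = window_size
        self.max_out_of_orderness = max_out_of_orderness
        # window start -> key -> count, for windows that are still open
        self.open_windows = defaultdict(lambda: defaultdict(int))
        self.watermark = float("-inf")
        self.late_records = []

    def on_event(self, key, timestamp):
        """Ingest one record and return the windows that became final."""
        window_start = timestamp - timestamp % self.window_size
        if window_start + self.window_size <= self.watermark:
            # The window was already emitted, so this record arrived too late.
            self.late_records.append((key, timestamp))
            return []
        self.open_windows[window_start][key] += 1
        # The watermark trails the largest timestamp seen by the allowed disorder.
        self.watermark = max(self.watermark, timestamp - self.max_out_of_orderness)
        return self._fire_closed_windows()

    def _fire_closed_windows(self):
        fired = []
        for start in sorted(self.open_windows):
            if start + self.window_size <= self.watermark:
                fired.append((start, dict(self.open_windows.pop(start))))
        return fired


counter = TumblingWindowCounter(window_size=10, max_out_of_orderness=5)
results = []
for key, ts in [("a", 1), ("b", 12), ("a", 7), ("a", 16), ("a", 3)]:
    results.extend(counter.on_event(key, ts))

print(results)               # [(0, {'a': 2})]
print(counter.late_records)  # [('a', 3)]
```

The record with timestamp 7 arrives after the one with timestamp 12 but is still counted, while the record with timestamp 3 arrives after its window has closed and is set aside. Every job that needs windows has to repeat logic of this kind, and the sketch does not yet cover state storage or recovery after a failure.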

We have now migrated most of our Storm jobs to Flink. The number of real-time computations performed on Oceanus has reached 20 trillion per day!

Introduction to Oceanus

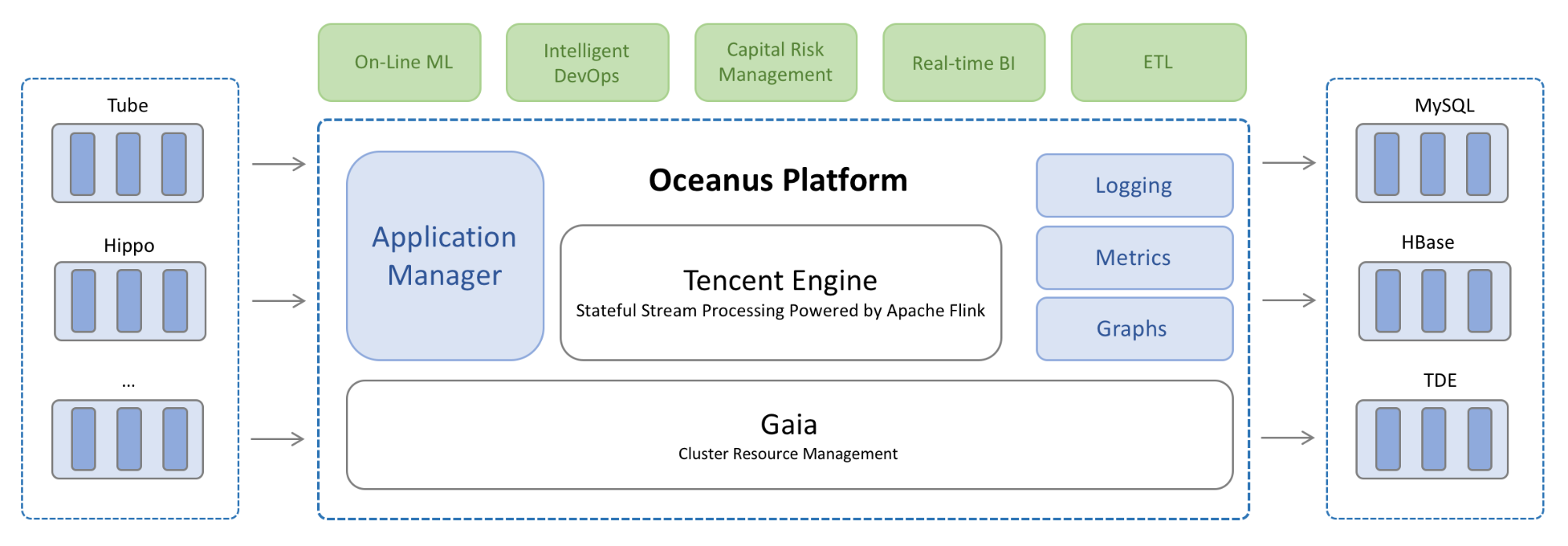

Oceanus is built to improve the efficiency of developing and operating real-time applications, e.g., online ML, ETL, and real-time BI. The architecture of Oceanus is illustrated in the figure above.

In typical cases, users read events from external storage such as Tube, MySQL, and HBase, process these events with Oceanus, and finally write the results back to external storage. Oceanus uses Apache Flink® as its execution engine and facilitates the development of Flink jobs with flexible programming interfaces. To achieve good performance with high resource utilization, Oceanus executes all Flink jobs on Gaia, a resource management system built on top of Yarn. With efficient logging, metrics, and visualization tools, users can effectively operate and monitor their jobs with Oceanus.

Flexible programming interfaces

Oceanus provides a variety of methods to develop real-time applications, namely Canvas, SQL and JAR.

Most users can easily develop their real-time applications with Canvas. Oceanus provides a set of common operators, and users develop their applications by dragging and connecting these operators. With Canvas, users can focus on their application logic without needing to understand any underlying implementation details.

Users familiar with SQL can write their applications with Flink SQL. Because Flink SQL follows the SQL standard, users can easily adapt applications originally written for batch processing to data streams. To further improve the efficiency of developing SQL programs, Oceanus provides a set of editor features such as:

- Syntax highlighting and automatic completion
- Fuzzy matching of the names of tables, fields, and functions
- One-click code formatting
- One-click code verification

Because Canvas and SQL have limited expressive power, Oceanus also allows users to develop their applications with the imperative DataStream interfaces. Users can use DataStream to deal with complex logic and perform aggressive optimizations. They can then run their DataStream programs on Oceanus by simply uploading the JARs of their applications.

Visualization of computing results

Oceanus provides two methods to visualize computing results. First, Oceanus samples computing results and displays them on web pages. This method is well suited to users who are testing their programs: they can validate correctness by simply comparing these results with the expected ones.

Oceanus also allows the visualization of computing results with Xiaoma Dashboard, a visualization tool developed by Tencent. Users can easily build their dashboards to make the results understandable.

Quick verification of programs

With the visualization of computing results, users can quickly verify their programs by comparing the expected results with the actual ones. Users can populate the test data either with data randomly generated by Oceanus or by uploading their own data. To achieve more realistic results, they can also test their programs with data sampled in production.

Easy deployment of applications

Oceanus frees users from the burdens of resource allocation and application deployment. Oceanus employs Gaia, a resource management system built on top of Yarn, to manage resources and schedule jobs. Users can configure their jobs in Oceanus and submit them with one click. Oceanus will then calculate the required resources and deploy the jobs in production with Gaia. With the checkpointing mechanism provided by Flink and the scheduling mechanism provisioned by Gaia, users can change the parallelism of jobs dynamically.
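Changing parallelism depends on keyed state being redistributable when a job restarts from a checkpoint. Flink handles this by hashing every key into a fixed number of key groups and giving each parallel subtask a contiguous range of those groups. The sketch below models that idea in plain Python. `MAX_PARALLELISM`, the CRC32 hash, and the function names are stand-ins chosen for illustration (Flink uses its own hash function), and nothing here reflects how Gaia schedules the restarted job.

```python
import zlib

MAX_PARALLELISM = 128  # number of key groups; assumed fixed for the lifetime of the job


def key_group_for(key):
    # CRC32 is a stand-in hash that is stable across runs and machines.
    return zlib.crc32(key.encode("utf-8")) % MAX_PARALLELISM


def subtask_for(key_group, parallelism):
    # Each subtask owns a contiguous range of key groups.
    return key_group * parallelism // MAX_PARALLELISM


def rescale(state_by_key_group, new_parallelism):
    """Redistribute checkpointed state (key group -> state) over the new subtasks."""
    subtasks = [dict() for _ in range(new_parallelism)]
    for key_group, state in state_by_key_group.items():
        subtasks[subtask_for(key_group, new_parallelism)][key_group] = state
    return subtasks


# State captured in a checkpoint, organised by key group rather than by subtask.
checkpoint = {}
for user in ["alice", "bob", "carol", "dave"]:
    checkpoint.setdefault(key_group_for(user), {})[user] = 1

before = rescale(checkpoint, new_parallelism=2)
after = rescale(checkpoint, new_parallelism=4)
print([sorted(s) for s in before])
print([sorted(s) for s in after])
```

Because a key's group never changes, rescaling only reassigns whole key groups to subtasks; no individual key has to be rehashed or looked up when the job restarts with a different parallelism.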

Rich runtime metrics

Oceanus collects a wide range of runtime metrics from Flink jobs and writes them to Tube, a message queue service at Tencent. These metrics are then aggregated and displayed on web pages. With these metrics, users can better operate their applications and easily find the causes of failures or exceptions.

Improvements to Flink

The Big Data team at Tencent has put considerable effort into enhancing the functionality and reliability of Flink. Some of these improvements are listed below:

- Provide more than 30 functions for Table API and SQL
- Allow the handling of time-out events in AsyncIO operators
- Allow flexible processing of late records in window operators
- Enhance the reliability with the reconciling of Job Masters
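The time-out item can be illustrated with a small example. The sketch below uses plain Python `asyncio`, not Flink's AsyncIO operator API, to show the general pattern of letting the application decide what to emit when an asynchronous lookup takes too long, rather than failing the whole job. The `lookup` function, its artificial delays, and the `fallback` value are hypothetical.

```python
import asyncio


async def lookup(key, delay):
    """Stand-in for a remote lookup (e.g. a database call) that takes `delay` seconds."""
    await asyncio.sleep(delay)
    return f"profile-of-{key}"


async def enrich(key, delay, timeout, fallback=None):
    """Enrich one record; on time-out, emit a fallback value instead of raising."""
    try:
        value = await asyncio.wait_for(lookup(key, delay), timeout)
    except asyncio.TimeoutError:
        value = fallback
    return (key, value)


async def enrich_all(requests, timeout):
    # gather runs the lookups concurrently and returns results in input order.
    return await asyncio.gather(*(enrich(k, d, timeout) for k, d in requests))


results = asyncio.run(enrich_all([("u1", 0.01), ("u2", 0.5)], timeout=0.1))
print(results)  # [('u1', 'profile-of-u1'), ('u2', None)]
```

The slow request for `u2` yields a record with a fallback value while the fast request for `u1` is enriched normally, so one slow external call does not block or fail the rest of the stream.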

The Tencent team is very active in the Flink community, having contributed approximately 100 pull requests, a number that is set to increase.

In the near future, we will continue our work on Flink to enhance its functionality and reliability. We will also add more functionality to Oceanus to facilitate the development of real-time applications, for example by building an effective framework for online learning on top of Oceanus.

Apache Flink, Flink®, Apache®, the squirrel logo, and the Apache feather logo are either registered trademarks or trademarks of The Apache Software Foundation.
