The transformation of (open source) data processing

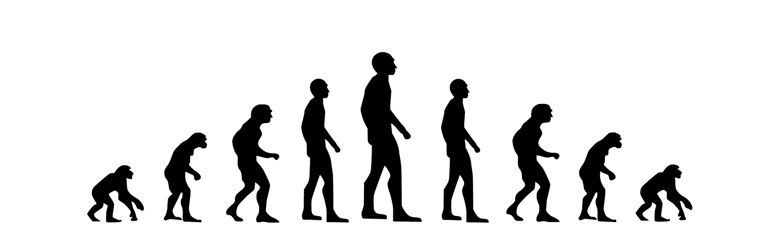

This blog post is based on a talk I gave at the Big Data Spain Conference 2018 in Madrid. It traces how (open source) data processing has evolved, covering the different technologies and how they progressed over time as frameworks became more sophisticated and as the volume and speed of generated data kept increasing.

The blog post tries to answer two questions: How can we process data? And what data processing systems are available to us today? The “why” of data processing is fairly obvious if we consider the vast number of sensors, connected devices, and website or mobile application visitors, along with all the data generated by humans and machines alike. The need for data processing has persisted from the moment computers were invented and humans had access to data. Let’s look at the evolutionary steps of data processing in the sections below.

The “pre-history” of Big Data

With the invention of computers came a clear need for information and data processing. During these very early days, computer scientists had to write custom programs for processing data, most likely on punch cards. The next steps brought assembly language and more purposeful programming languages such as Fortran, and later C and Java. In this prehistoric big data space, software engineers used these languages to write purpose-built programs for specific data processing tasks. However, this data processing paradigm was only accessible to the select few who had a programming background, which prevented wider adoption by data analysts or by the business community that wanted to process information and make specific decisions.

As a natural next step came the invention of the database around the 1970s (the exact date can be argued). Traditional relational database systems, such as IBM’s databases, enabled SQL and opened data processing to wider audiences. SQL is a standardized and expressive query language that reads somewhat like English. It gives access to data processing to more people, who no longer have to rely on a programmer to write special case-by-case programs to analyze data. SQL also expanded the number and type of applications relevant to data processing, such as business applications and analytics on churn rates, average basket size, year-on-year growth figures, etc.
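To make the point about SQL concrete, here is a minimal sketch of the “average basket size” analysis mentioned above, using Python’s built-in `sqlite3` module. The `orders` table, its columns, and the function name are hypothetical and chosen only for illustration. The point is that the analysis itself is a single declarative query rather than a custom program.

```python
import sqlite3


def average_basket_size(orders):
    """Return the average number of items per order.

    `orders` is a list of (order_id, item) rows; the schema is an
    assumption made for this example, not taken from any real system.
    """
    conn = sqlite3.connect(":memory:")
    try:
        conn.execute("CREATE TABLE orders (order_id INTEGER, item TEXT)")
        conn.executemany("INSERT INTO orders VALUES (?, ?)", orders)
        # Count the items in each order, then average those counts.
        row = conn.execute(
            """
            SELECT AVG(items_in_order)
            FROM (
                SELECT COUNT(*) AS items_in_order
                FROM orders
                GROUP BY order_id
            )
            """
        ).fetchone()
        return row[0]
    finally:
        conn.close()


sample_orders = [(1, "milk"), (1, "bread"), (1, "eggs"), (2, "coffee")]
print(average_basket_size(sample_orders))
```

An analyst only needs to change the query text to ask a different question of the same data.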

The early days of Big Data

The era of Big Data begins with the MapReduce paper released by Google. The paper explains a simple model based on two primitives, Map and Reduce (which are second-order functions), that allows computations to run in parallel across a massive number of machines. Of course, parallel computation was possible even before the MapReduce era through multiple computers, supercomputers, and MPI systems. However, MapReduce made it available to a wider audience.
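The Map and Reduce primitives can be illustrated with a small, single-machine sketch of the classic word count. This is not Google’s or Hadoop’s implementation: the function names are made up for this example, and the “shuffle” step that a real system performs across the network is simulated here with an in-memory dictionary.

```python
from collections import defaultdict


def map_phase(records, mapper):
    """Apply the user-supplied mapper to every record, emitting (key, value) pairs."""
    for record in records:
        yield from mapper(record)


def shuffle(pairs):
    """Group all values by key (done across machines in a real system)."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(groups, reducer):
    """Apply the user-supplied reducer to each key and its list of values."""
    return {key: reducer(key, values) for key, values in groups.items()}


def word_count_mapper(line):
    # Emit (word, 1) for every word in the line.
    for word in line.lower().split():
        yield word, 1


def sum_reducer(key, values):
    # Add up all the 1s emitted for this word.
    return sum(values)


def run_word_count(lines):
    pairs = map_phase(lines, word_count_mapper)
    return reduce_phase(shuffle(pairs), sum_reducer)


print(run_word_count(["big data", "fast data"]))
```

Because each mapper call looks at one record and each reducer call looks at one key, a framework is free to spread both phases across many machines, which is what makes the model attractive.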

Then came Apache Hadoop, an open-source implementation of the framework (initially implemented at Yahoo!) that made it widely available to a broader audience. Hadoop saw widespread adoption by different companies, and many Big Data players, such as Cloudera and Hortonworks, originated around the Hadoop framework. Hadoop brought a new paradigm to the data processing space: one where you store data in a distributed file system or storage (such as HDFS for Hadoop) and then, at a later point, start asking questions of this data (querying the data). The Hadoop space followed a similar path to relational databases (history always repeats itself): the first step involved custom programming by a specific “caste” of individuals who were able to write programs, and the next step brought SQL queries on data in a distributed file system, through Hive or similar frameworks.

The next level in batch processing

The next step for Big Data comes with the introduction of Apache Spark. Spark allowed additional parallelization and brought batch processing to the next level. As mentioned earlier, batch processing involves putting data in a storage system and then scheduling computations on it. The main concept here is that your data sits somewhere while you periodically (hourly, daily, weekly) run computations to see results based on past information. These computations don’t run continuously; they have a start and an end. As a result, you have to re-run them on an ongoing basis to get up-to-date results.

Evolving from Big Data to Fast Data: Stream processing

The next step in the evolution of Big Data is the introduction of stream processing, with Apache Storm as the first widely used framework (there were other research systems and frameworks at the same time, but Storm was the one to see increased adoption). What this framework enables is writing programs that run continuously (24/7). So, contrary to the batch processing approach, where your programs and applications have a beginning and an end, with stream processing your program runs continuously on data and produces outcomes in real time, while the data is generated. Stream processing was further advanced with the introduction of Apache Kafka (which originated at LinkedIn) as a storage mechanism for a stream of messages. Kafka acted as a buffer between your data sources and the processing system (like Apache Storm).
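The difference between the two models can be sketched in a few lines of plain Python. This is only an illustration with hypothetical function names, not how Spark, Storm, or Kafka work internally: the batch function needs the complete stored dataset and returns once, while the streaming function emits an updated result for every event as it arrives.

```python
def batch_total(stored_events):
    """Batch: the data is already at rest; compute once and finish."""
    return sum(stored_events)


def streaming_totals(event_stream):
    """Streaming: keep running state and emit a fresh result per event.

    On an unbounded stream this generator never terminates on its own,
    which mirrors a job that runs 24/7.
    """
    total = 0
    for amount in event_stream:
        total += amount
        yield total


events = [5, 10, 20]

# Batch job: one answer, valid as of the time the job ran.
print(batch_total(events))

# Streaming job: an up-to-date answer after every single event.
for running_total in streaming_totals(iter(events)):
    print(running_total)
```

To keep the batch result current you would have to schedule `batch_total` again and again, whereas the streaming version is always up to date by construction.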

A slight detour in the evolution of Big Data was the so-called “Lambda architecture”. This architecture originated because the initial adopters of stream processing did not believe that stream processing systems like Apache Storm were reliable enough, so they kept both systems (batch and stream processing) running simultaneously. The Lambda architecture combines the two: a stream processing system like Apache Storm is used for real-time insights, while a batch processing system runs periodically and maintains the ground truth of what has happened. At this point in time, stream processing is used only for immediate action or analytics, not as the source of truth.
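As a rough sketch of this idea, the toy class below keeps a batch view (the ground truth as of the last batch run) and a speed view (events seen since then) and merges them at query time. The class and method names are hypothetical, and everything lives in memory, so this only illustrates the shape of the architecture, not a production design.

```python
from collections import Counter


class LambdaView:
    """Toy Lambda architecture: event counts per key from two layers."""

    def __init__(self):
        self.master_log = []         # every event ever received (batch layer input)
        self.batch_view = Counter()  # ground truth as of the last batch run
        self.speed_view = Counter()  # real-time counts since the last batch run

    def on_event(self, key):
        # The event is stored for the next batch run and also
        # processed immediately by the speed layer.
        self.master_log.append(key)
        self.speed_view[key] += 1

    def run_batch(self):
        # Periodic batch job: recompute the truth from all stored data,
        # then discard the speed layer's now-redundant results.
        self.batch_view = Counter(self.master_log)
        self.speed_view.clear()

    def query(self, key):
        # Serving layer: merge both views to answer a query.
        return self.batch_view[key] + self.speed_view[key]


view = LambdaView()
view.on_event("page_a")
view.on_event("page_a")
view.run_batch()
view.on_event("page_a")
print(view.query("page_a"))
```

Even in this tiny version, the same counting logic exists twice (once in `on_event`, once in `run_batch`), which hints at the maintenance overhead of running two systems side by side.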

Making stream processing accessible: Apache Flink

The landscape arguably changed around 2015, when Apache Flink started becoming a prominent stream processing framework that developers and data and analytics leaders started adopting. From the very early days, Flink offered very strong guarantees: exactly-once semantics and a fault-tolerant processing engine. These made users believe that the Lambda architecture was no longer a necessity and that stream processing could be trusted for complex event processing and continuously running, mission-critical applications. All the overhead that came with building and maintaining two systems (one for batch and one for stream processing) could now go away, as Flink introduced a reliable and accessible data processing framework.

Stream processing introduces a new paradigm and a change in mindset: from a request-response model, where data is stored before you can ask questions about it (for example, about a potential fraud case), to one where you ask the questions first and get the answers in real time, as the data is generated.

For example, with stream processing, you can develop a fraud detection program that is running 24/7. It gets events in real time and gives you insight when there is credit card fraud, preventing it from actually happening in the first place. In my opinion, this is one of the bigger shifts in data processing because it allowed real-time insights into what is happening in the world.
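A continuously running fraud check like the one described above can be sketched as follows. This is plain Python rather than Flink’s API, and the rule (a very small transaction immediately followed by a very large one on the same card), the thresholds, and all names are assumptions invented for this illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Transaction:
    card_id: str
    amount: float


# Hypothetical thresholds, chosen only for the example.
SMALL_AMOUNT = 1.00
LARGE_AMOUNT = 500.00


def detect_fraud(transactions):
    """Yield suspicious transactions as soon as they are seen.

    Per-card state remembers whether the previous transaction on that
    card was "small"; a "large" one right after it raises an alert.
    """
    last_was_small = {}
    for tx in transactions:
        if last_was_small.get(tx.card_id, False) and tx.amount > LARGE_AMOUNT:
            yield tx
        # Update the state for this card with the current transaction.
        last_was_small[tx.card_id] = tx.amount < SMALL_AMOUNT


stream = [
    Transaction("card-A", 0.50),
    Transaction("card-B", 900.00),
    Transaction("card-A", 700.00),
    Transaction("card-A", 800.00),
]
for alert in detect_fraud(stream):
    print("possible fraud:", alert)
```

The question (the fraud rule) is defined up front, and answers are produced event by event. A stream processing framework adds what this sketch leaves out: distributing the per-card state across machines and recovering it after failures.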

Because history is repeating itself again, stream processing followed the same path as relational databases and batch processing: initially, access is restricted to a select audience of programmers, and then the introduction of SQL makes the framework accessible to a bigger audience that does not necessarily know how to write code.

Conclusion

In our discussion about the evolution of (open source) data processing, we see a common pattern: a new framework is introduced in the market (e.g. a relational database, batch processing, stream processing) that is initially available to a specific audience (programmers) who can write custom programs to process data. Then comes the introduction of SQL in the framework, which makes it widely accessible to audiences that don’t need to write programs for sophisticated data processing. With the introduction of SQL in stream processing, we see wide adoption of the framework in the enterprise and we expect the market to grow exponentially. At the Flink Forward Berlin 2018 conference, I gave a talk, together with my colleague Till Rohrmann, about the past, present, and future of Apache Flink. It describes where Flink came from and some future directions we can expect to see in the framework with upcoming releases. I encourage you to register for Flink Forward San Francisco, April 1-2, 2019, for more talks on the future directions of Apache Flink.

Feel free to subscribe to the Apache Flink Mailing List for future updates and new releases, or contact us for more information!
